Can we trust the Wizard? Interpreting Knowledge-Grounded Dialogue Systems

Abstract

Knowledge-grounded dialogue systems tend to generate responses based on information provided in grounding corpora. Though there has been recent progress in training end-to-end informative systems that mimic human language at the linguistic level, yet there are no controls available that ensure they are truthful. Everyday, such systems help educate users about a particular topic through conversational multi-turn interaction. This research focuses on interpreting these generative neural dialogue models, and to ensure that responses stay ‘faithful’ to information from a text document in a conversation.

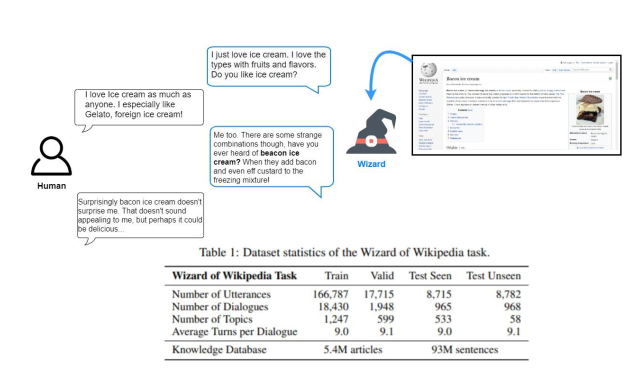

The problem is approached using fine tuned GPT-2 and T5 neural dialogue models that produce a dialogue response using a piece of evidence from a grounding document and a previous conversation history as input (as in Figure 1). Compared with previous work, generative PersonaChat models focus on dialogue systems that are meant to be engaging, and do not exclude subjective or invented personal information. Existing datasets could be appropriate training resources for such an informative dialogue agent, however, often contain utterances with varying conversation styles and intents. For instance, in Figure 1, shows an example conversation excerpt from the Wizard of Wikipedia training set. Because of this mix of conversation styles, it is vital to inspect the faithfulness of produced utterances. We use an automatic evaluation framework that includes BLEU along with an ablation study on three vital metrics for estimating whether a response (1) written in objective voice, (2) its content is derived from the evidence and (3) entailed by grounding evidence. Human evaluation survey is also conducted on various pertinent metrics such as fluency, relevance, faithfulness and objectivity.

Across both human and automatic metrics, results from the analysis show the low accuracies towards a ‘faithful’ generated response. Collecting new datasets where the responses are more explicitly constrained by the evidence is expensive and challenging. The question that now arises is, “Can we use controllable features at generation time to disentangle these conversational styles within the data?” An alternative, yet straightforward approach is adding features to control for support given the grounding document, and present a resampling technique that satisfies faithfulness metrics at decoding time, avoiding content "hallucination" as much as possible. Hallucinations are defined here as any information that is neither inferable from nor directly stated by external documents. This research conveys that control codes help increase faithfulness, especially when used together with resampling, with an average increase of 25% in accuracy which correlates with the human judgements.

This work states that before applying these models, evidence sources must be ensured to be reliable and unbiased. An open question for the readers to take away now is how to resolve the cases where there need to be more reasoning or deeper inference.